Study showing that GenAI tools slow software developers down

I didn’t want to publicly comment on the recent METR study, but here we go…

First, it’s interesting how loaded some of the responses the authors received were. I admire how thoughtfully they responded; see, for example, Nate Rush in this thread.

Second, I think they were more transparent than 90% of the studies I have seen on the impact of GenAI on software development. Just look at the appendix of the paper (linked in the comments).

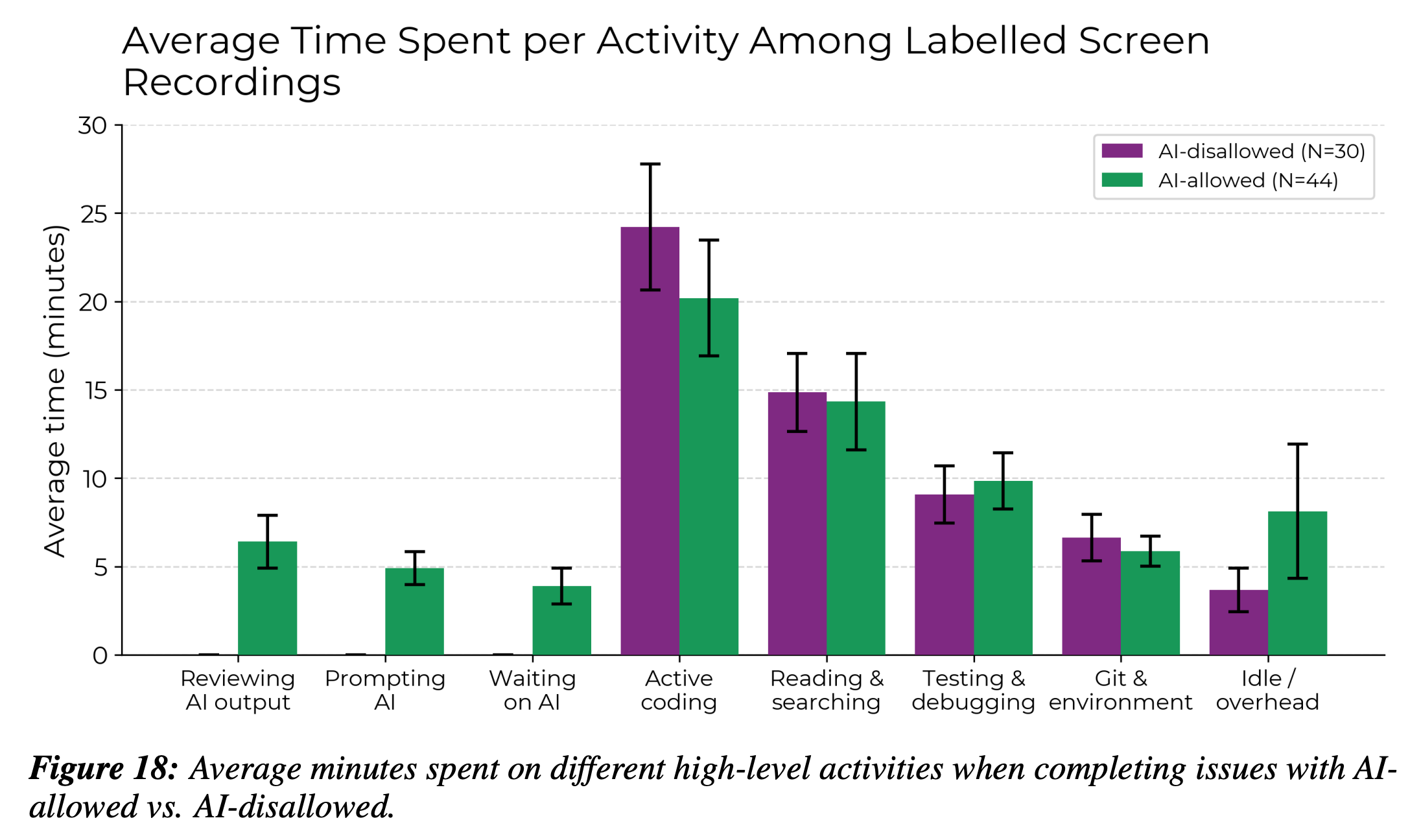

Third, the most intriguing part to me is Figure 18 (see below), in particular the first three bars and the last bar. These aspects capture additional overhead when using GenAI tools. They are important factors to consider when designing studies on the impact of GenAI tools, and I assume they are generally underrated and/or not self-reported in the questionnaire-based studies that we so frequently see. Sure, this might improve with better models and tools, but it doesn’t mean that we should ignore these aspects today.

Congrats Joel Becker, Nate Rush, Beth Barnes, and David Rein on publishing a preprint of the paper. Looking forward to seeing a peer-reviewed version.

The preprint is available on arXiv, and the authors also published a blog post.